-

Disabling old TLS

In my last post I wrote about the upcoming change of disabling old versions of TLS. One detail I left out was deciding when, and how, to do it.

At minimum, your site should support the latest version of TLS. As of writing, that is 1.2, with 1.3 hot on its heels.

Who’s Impacted?

When people or organizations start evaluating this, the first question is usually what the impact on the site’s users will be. Disabling old versions of TLS unfortunately makes for a poor user experience: for reasons I discussed previously, there is no way to tell the user “Your version of TLS is not supported, please update”.

Not everyone has the same set of users, either. Some websites get a disproportionate share of traffic from tech-savvy people, who tend to run more up-to-date operating systems and browsers. Other sites may get most of their traffic from users who are less inclined to update their software.

As such, the only way to get a clear picture of the impact on a site is to measure the traffic it currently receives. Most web servers and other places that terminate TLS (such as a load balancer) can log various aspects of the TLS handshake. The two that matter most are the negotiated TLS version and the negotiated cipher suite. It’s also very beneficial to collect the User-Agent header and the client IP address.

TLS tries to negotiate the highest version that both the client and the server support. If a client negotiates TLSv1.0, then it is very unlikely that it supports TLSv1.2. If 1% of all negotiated handshakes are TLSv1.0, then disabling TLSv1.0 will result in 1% of handshakes failing.
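Here is a minimal Rust sketch of that arithmetic. It turns one logged protocol string per handshake into percentages, then sums the share that would fail if some versions were disabled. It assumes the strings look like nginx’s $ssl_protocol values (TLSv1, TLSv1.1, TLSv1.2). Other servers spell them differently, and the function names are made up for illustration.

```rust
use std::collections::BTreeMap;

/// Percentage of handshakes per negotiated protocol.
/// Input: one protocol string per logged handshake, e.g. "TLSv1.2".
fn protocol_shares<'a, I: IntoIterator<Item = &'a str>>(protocols: I) -> BTreeMap<String, f64> {
    let mut counts: BTreeMap<String, u64> = BTreeMap::new();
    let mut total = 0u64;
    for p in protocols {
        *counts.entry(p.to_string()).or_insert(0) += 1;
        total += 1;
    }
    counts
        .into_iter()
        .map(|(p, n)| (p, 100.0 * n as f64 / total as f64))
        .collect()
}

/// Share of handshakes (in percent) that would fail if `disabled` were turned off,
/// assuming clients that negotiated an old version cannot do anything newer.
fn share_if_disabled(shares: &BTreeMap<String, f64>, disabled: &[&str]) -> f64 {
    disabled.iter().filter_map(|p| shares.get(*p)).sum()
}
```

With 99 TLSv1.2 handshakes and one TLSv1 handshake, disabling TLSv1 and TLSv1.1 comes out at 1%. That matches the example above.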

That doesn’t necessarily mean 1% of users would be impacted. Almost all sites (even this one) get crawled, either legitimately by search indexers or by others simply looking for common website exploits. Eliminating handshake statistics that don’t come from users will give a much clearer picture of the actual impact on users. That doesn’t mean you shouldn’t care about crawlers! Healthy SEO is important to many web properties, and it’s quite possible that crawlers you are targeting don’t support modern versions of TLS. However, their share of the traffic can be disproportionate. That said, most reputable crawlers do support TLSv1.2.

The IP address and User-Agent header can aid in identifying the source of a handshake. Good crawlers identify themselves with a User-Agent. Less kind crawlers may use an agent string that mimics a browser. For those, you may be able to compare the IP addresses against a list of known spam or bot IP addresses.
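Here is a crude first pass at separating self-identified crawlers from browsers. The marker list is hypothetical and should be tuned against your own logs. It does nothing about crawlers that spoof a browser User-Agent, which still need the IP address checks described above.

```rust
/// Hypothetical, deliberately short list of lowercase User-Agent substrings.
const CRAWLER_MARKERS: &[&str] = &["googlebot", "bingbot", "crawler", "spider"];

/// True if the User-Agent openly identifies itself as a crawler.
fn looks_like_crawler(user_agent: &str) -> bool {
    let ua = user_agent.to_ascii_lowercase();
    CRAWLER_MARKERS.iter().any(|m| ua.contains(*m))
}
```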

If your website performs any kind of user identification, such as signing in, you may be able to go further and confirm that certain handshakes and TLS sessions come from real users. Those should carry more weight in your statistics.

Collecting Statistics

Web servers and load balancers generally support logging common elements of an HTTP request.

Most of them support a common log format called “combined”, which looks like this:

    127.0.0.1 - - [01/Jan/2018:23:59:59 -0400] "GET / HTTP/2.0" 200 9000 "-" "<User Agent String>"

This breaks down to:

    <client ip> - <basic auth user> [<date time>] "<request>" <response code> <response size> "<referer>" "<user agent>"

The combined logging format doesn’t include any TLS information. Fortunately, web servers are flexible about what they log and how they log it. You will need something like a flat file parser to query these logs. Alternatively, it is very helpful to log to a central location, with syslog or by other means, into a data store that allows querying. That way the log file on the server itself can easily be rotated to keep disk usage to a minimum.

Caddy

Caddy’s logging directive is simple and can easily be extended.

    log / /var/log/caddy/requests.log "{combined} {tls_protocol} {tls_cipher}"

Caddy has many placeholders for what you can include in your logs.

Note that as of writing, Caddy’s documentation for the TLS version placeholder is incorrect. It indicates the placeholder is {tls_version} when it is actually {tls_protocol}.

Nginx

Nginx has a similar story. Define a log format in the http block using the $ssl_protocol and $ssl_cipher variables for the TLS version and cipher suite, respectively.

    log_format combined_tls '$remote_addr - $remote_user [$time_local] '
                            '"$request" $status $body_bytes_sent '
                            '"$http_referer" "$http_user_agent" $ssl_protocol $ssl_cipher';

Then use the log format in a server block.

    access_log /var/log/nginx/nginx-access.log combined_tls;

Apache

Declare a log format:

    LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-agent}i\" %{version}c %{cipher}c" combined_tls

Then add a CustomLog directive to the server configuration or a virtual host block:

    CustomLog /var/log/apache/apache-access.log combined_tls

With all that said and done, you’ll have a Combined Log extended with your TLS connection information.
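Once the TLS fields are in the log, something has to pull them back out. Below is a minimal sketch of a parser for one combined_tls line. It makes two assumptions: the request, referer and user agent contain no literal double quotes (servers normally escape them), and the protocol and cipher are the last two fields, as in the formats above. The struct and function names are hypothetical.

```rust
/// The fields we care about from one "combined_tls" log line.
#[derive(Debug, PartialEq)]
struct TlsLogEntry {
    client_ip: String,
    user_agent: String,
    protocol: String,
    cipher: String,
}

/// Sketch parser; returns None for lines that don't have the expected shape.
fn parse_combined_tls(line: &str) -> Option<TlsLogEntry> {
    // Splitting on '"' yields seven pieces: prefix (ip, user, time), request,
    // status and size, referer, a single space, user agent, and the TLS fields.
    let parts: Vec<&str> = line.split('"').collect();
    if parts.len() != 7 {
        return None;
    }
    let client_ip = parts[0].split_whitespace().next()?;
    let mut tls = parts[6].split_whitespace();
    let protocol = tls.next()?;
    let cipher = tls.next()?;
    Some(TlsLogEntry {
        client_ip: client_ip.to_string(),
        user_agent: parts[5].to_string(),
        protocol: protocol.to_string(),
        cipher: cipher.to_string(),
    })
}
```

To get the per-version share of real handshakes, group the parsed entries by protocol after filtering out crawler user agents.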

You can also use custom log formats to log in a different format entirely, such as JSON, and ingest the logs into a NoSQL database or any other query engine that is friendly to JSON.

Trials

One good idea, used by some sites that have already disabled old TLS, is a “brown-out”. GitHub disabled TLSv1.0 and 1.1 for just one hour. This helped people identify incompatible browsers or software of their own while only breaking them temporarily. Presumably the idea was to break things briefly so people would know something was wrong and find documentation about the TLS errors they started getting. Everything would then work again while those people or teams deployed new versions of software that supported TLSv1.2.

CloudFlare is doing the same thing for their APIs, except the brown-out lasts a whole day.

I like this idea, and would encourage people to do this for their web applications. Keep support teams and social media aware of what is going on so they can give the best response, and have plenty of documentation.

In GitHub’s case, unfortunately, I would say their one hour window was probably a little too short to capture global traffic. A whole day might be too long for others as well. Consider both of these options, or others such as multiple one hour periods spaced out over a 24 or 48 hour period. Geography tends to play an important role in which versions of browsers and software are deployed. In China, for example, the Qihoo 360 browser is very popular. You wouldn’t get a representative sample of Qihoo’s traffic during the day in the United States.
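One way to plan the “multiple one hour periods” option is to space fixed-length windows evenly, so each lands at a different time of day in different parts of the world. Here is a small sketch that assumes times are tracked as minutes since an agreed UTC start. The helper names and the three-windows-eight-hours-apart example are illustrative; this is not a schedule GitHub or CloudFlare published.

```rust
/// Length of each brown-out window, in minutes.
const WINDOW_MINUTES: u32 = 60;

/// `count` windows starting at `first_minute`, each `spacing_minutes` apart.
/// Each window is a half-open range [start, end) in minutes since the UTC start.
fn brownout_windows(first_minute: u32, count: u32, spacing_minutes: u32) -> Vec<(u32, u32)> {
    (0..count)
        .map(|i| {
            let start = first_minute + i * spacing_minutes;
            (start, start + WINDOW_MINUTES)
        })
        .collect()
}

/// True if old TLS versions should be refused at `minute`.
fn in_brownout(windows: &[(u32, u32)], minute: u32) -> bool {
    windows.iter().any(|&(start, end)| minute >= start && minute < end)
}
```

For example, brownout_windows(0, 3, 480) gives one-hour windows at 00:00, 08:00 and 16:00 UTC. Over a day, those cover daytime hours in Asia, Europe and the Americas respectively.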

Since we mentioned logging: during the brown-out period, be sure to log handshakes that fail because a protocol or cipher suite couldn’t be agreed upon.

Beyond the Protocol

Many are focused on TLSv1.2, but making 1.2 the minimum supported TLS version gives us other opportunities to improve.

You could consider using an ECDSA certificate. Most browsers that support TLS 1.2 also support ECDSA certificates. The only broad exception I can think of is Chrome on Windows XP. Chrome on XP supports TLSv1.2, but uses the operating system to validate and build a certificate path. Windows XP does not support ECDSA certificates.

Other browsers, like Internet Explorer, won’t work on Windows XP anyway because they don’t support TLSv1.2 in the first place. Firefox uses NSS for everything, so ECDSA works on Windows XP as well.

ECDSA has a few advantages. The first is smaller certificates. If ECDSA is also used throughout the certificate chain as much as possible, this saves hundreds of precious bytes in the TLS handshake. It’s also, for the most part, more secure than RSA: RSA in TLS still widely uses PKCS#1 v1.5 padding, and ECDSA avoids that problem entirely.

Lastly, as mentioned previously, consider the cipher suites, both the key agreement and the symmetric algorithm. FF-DHE (finite-field Diffie-Hellman) shouldn’t be used, and is already widely disabled in clients. Key generation is slow, and the parameters are sometimes configured incorrectly. It’s best to avoid DHE entirely and stick with ECDHE. Also consider whether static RSA key exchange is needed at all. There are few browsers out there that support TLSv1.2 but not ECDHE. That might not be true of non-browser software, which again comes back to measuring your own traffic.

Remove every symmetric algorithm except AES-GCM, ChaCha20, and AES-CBC. TLSv1.2 makes TLS_RSA_WITH_AES_128_CBC_SHA mandatory to implement. That suite doesn’t use ECDHE, but it does mean that any compliant TLSv1.2 client supports AES-CBC, so 3DES, RC4, Camellia, and the like needn’t be bothered with anymore. The order generally matters: AEAD suites should be placed first, and AES-CBC last.

Conclusions

Having done these experiments with a few small and medium sized sites, I’m optimistic about disabling old versions of TLS. In particular, I see little need to support TLSv1.1. Almost everything that supports TLSv1.1 also supports 1.2, so leaving 1.1 enabled doesn’t accomplish much.

Since requiring TLSv1.2 as a minimum already removes support for a lot of legacy browsers, we can also consider other areas of improvement, such as ECDSA for smaller, more secure certificates, and cleaning up the list of supported cipher suites.

-

Time to disable old TLS

For a few years there has been discussion about the eventual deprecation of TLS 1.0. It is interesting, and perhaps a little exciting to me, and it will be quite different from when SSL 3 was widely disabled.

TLS 1.0 is old. 1999 old: it’s been almost twenty years. That’s rather impressive for a security protocol. It has been extended over the years, for example with more modern cipher suites such as AES.

TLS 1.0 has not been without problems though. There have been various breakages of it over the past few years. While POODLE was widely known as an attack against SSL 3, it did affect certain implementations of TLS 1.0. BEAST is another such breakage. Clients work around BEAST with 1/n-1 record splitting, but it cannot be fixed at the protocol level without breaking compatibility, so a naive client will remain vulnerable. TLS 1.0 also uses older algorithms that cannot be changed, such as MD5 and SHA1 in the PRF when computing the master secret.

As a result, there has been a call to deprecate TLS 1.0. That time is finally here, not just for those that like being on the bleeding edge of security. This has been a long time coming, and it won’t be without difficulty for some users and site owners.

TLS 1.2 is newer, and unfortunately had slow uptake. While TLS 1.2 was specified in 2008, it didn’t see wide deployment until a few years later. Android is one example: it gained support in version 4.0 in late 2011. Only the latest version of Internet Explorer, version 11, has it enabled by default. MacOS 10.9, released in October 2013, was the first MacOS version to support TLS 1.2 (curiously, iOS 5 for the iPhone got TLS 1.2 in 2011, much sooner than MacOS). You can see a full list of common clients and their TLS 1.2 support on SSL Labs’ site.

Due to POODLE, SSL 3 was widely disabled in both clients and servers starting around 2014. By then, TLS 1.0 had had 14 years to work its way into software and consumer products. TLS 1.2, on the other hand, has had less time. The other rather recent development is the explosion of internet-connected consumer devices. Unlike desktop computers, these devices tend to have a much more problematic software update schedule, if one exists at all.

Putting all of this together, turning TLS 1.0 off is likely to have a much more noticeable impact on connectivity. However, it leads to better user safety. Many big players have already announced their plans to disable TLS 1.0. The most notable upcoming deadline applies to all organizations that need to be PCI compliant. PCI 3.2 stipulates the eventual shutoff of “early TLS”, which in this case means TLS 1.0 and SSL. The looming date is June 30th, 2018. This will impact every website that takes a credit or debit card.

After June 30, 2018, all entities must have stopped use of SSL/early TLS as a security control, and use only secure versions of the protocol (an allowance for certain POS POI terminals is described in the last bullet below)

PCI originally wanted this done by June 2016. However, when PCI 3.1 was announced, it quickly became apparent that many organizations would not be able to meet this goal. PCI 3.2 therefore extended the deadline by two years, but required companies to put together a risk mitigation and migration plan to cover the period until June 2018.

Many are wondering what they should do about TLS 1.1. Some organizations are simply turning off TLS 1.0, and leaving 1.1 and 1.2 enabled. Others are turning off both 1.0 and 1.1, leaving 1.2 as the only option. In my experience, almost all clients that support TLS 1.1 also support 1.2. Few browsers benefit from having TLS 1.1 enabled, since almost all of them also support 1.2 in their default configuration. However, the only way to know for certain is to measure based on your needs. TLS 1.1 also shares several character flaws with TLS 1.0.

A final note would be to tighten down the available cipher suites. TLS 1.2 makes TLS_RSA_WITH_AES_128_CBC_SHA a mandatory cipher suite, so there is little reason to have 3DES enabled if your site is going to be TLS 1.2 only. Also ensure that AEAD suites, such as AES-GCM or ChaCha20, are prioritized first. My preferred suite order might be something like this:

- ECDHE-ECDSA/RSA-AES128-GCM-SHA256

- ECDHE-ECDSA/RSA-AES256-GCM-SHA384

- ECDHE-ECDSA/RSA-WITH-CHACHA20-POLY1305

- ECDHE-ECDSA/RSA-AES128-CBC-SHA

- ECDHE-ECDSA/RSA-AES256-CBC-SHA
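The rule of thumb (keep only AES-GCM, ChaCha20 and AES-CBC suites, AEAD first and CBC last) can be sketched as a small function over IANA-style suite names. It is a string-matching approximation, not a TLS library API. Because sort_by_key is stable, your existing preference order within each group is kept.

```rust
/// Rough groups used for ordering: AEAD suites sort ahead of AES-CBC suites.
#[derive(Debug, PartialEq, Eq, PartialOrd, Ord, Clone, Copy)]
enum SuiteClass {
    Aead,
    Cbc,
}

/// Keeps AES-GCM, ChaCha20-Poly1305 and AES-CBC suites, drops the rest,
/// and moves AEAD suites in front of CBC ones.
fn filter_and_order(suites: &[&str]) -> Vec<String> {
    let classify = |s: &str| -> Option<SuiteClass> {
        if (s.contains("_AES_") && s.contains("_GCM_")) || s.contains("CHACHA20_POLY1305") {
            Some(SuiteClass::Aead)
        } else if s.contains("_AES_") && s.contains("_CBC_") {
            Some(SuiteClass::Cbc)
        } else {
            None // 3DES, RC4, Camellia, etc. are dropped
        }
    };
    let mut kept: Vec<(SuiteClass, String)> = suites
        .iter()
        .filter_map(|s| classify(*s).map(|c| (c, s.to_string())))
        .collect();
    kept.sort_by_key(|&(c, _)| c); // stable: relative order within a group is preserved
    kept.into_iter().map(|(_, s)| s).collect()
}
```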

You can of course move things around to better suit your needs or customers. Some prefer putting AES-256 in front of AES-128, favoring security over performance. The exact ones I use on this site are in my Caddyfile.
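Servers configured with an OpenSSL cipher string, such as nginx’s ssl_ciphers or Apache’s SSLCipherSuite, need the ECDSA/RSA shorthand spelled out. OpenSSL names the CBC suites without “CBC” (ECDHE-RSA-AES128-SHA) and the ChaCha20 suites without “WITH”. Placing each ECDSA suite just before its RSA twin is my assumption about how to expand the list.

```rust
/// The preferred order above, expanded into OpenSSL cipher names.
const PREFERRED_SUITES: &[&str] = &[
    "ECDHE-ECDSA-AES128-GCM-SHA256",
    "ECDHE-RSA-AES128-GCM-SHA256",
    "ECDHE-ECDSA-AES256-GCM-SHA384",
    "ECDHE-RSA-AES256-GCM-SHA384",
    "ECDHE-ECDSA-CHACHA20-POLY1305",
    "ECDHE-RSA-CHACHA20-POLY1305",
    "ECDHE-ECDSA-AES128-SHA", // AES128-CBC-SHA in the shorthand
    "ECDHE-RSA-AES128-SHA",
    "ECDHE-ECDSA-AES256-SHA", // AES256-CBC-SHA in the shorthand
    "ECDHE-RSA-AES256-SHA",
];

/// Colon-separated cipher list, the form OpenSSL-based servers accept.
fn openssl_cipher_list() -> String {
    PREFERRED_SUITES.join(":")
}
```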

-

Generating .lib with Rust build scripts

Something I’ve been working on in my spare time is porting Azure SignTool to Rust. I’ve yet to make up my mind about whether Rust is the one true way forward for it, but that’s a thought for another day.

I wanted to check out the feasibility of it. I’m happy to say that I think all of the concepts necessary are there, they just need to be glued together.

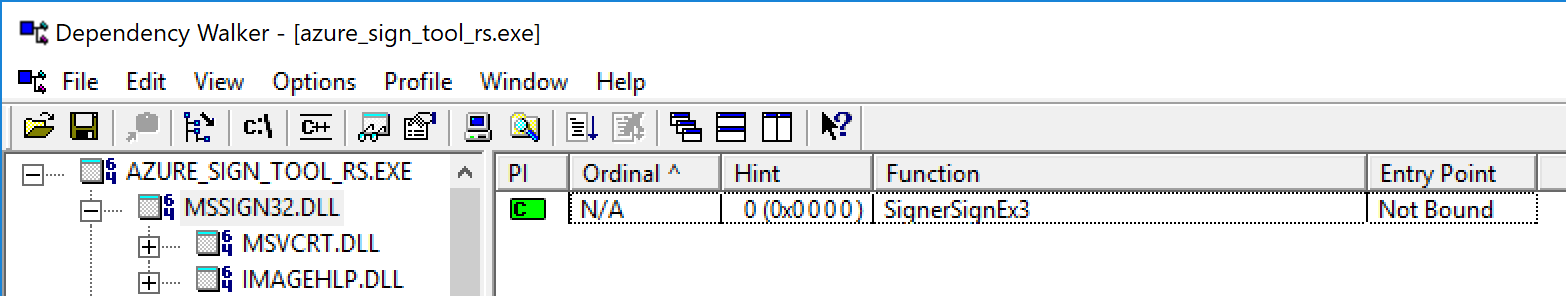

One roadblock with Azure SignTool is that it needs to use an API, SignerSignEx3, which isn’t included in the Windows SDK. In fact, just about nothing in mssign32 is in the Windows SDK. Not being in the Windows SDK means no headers, and no .lib to link against.

For .NET developers, the lack of a .lib hasn’t really mattered when consuming Win32 APIs. Platform invoke only needs the name or ordinal of the export, and the CLR takes care of the rest. Languages like C that use a linker need a .lib to link against. Rust is no different.

In most cases, the winapi crate has all of the Win32 functions you need. Only APIs that are not in the Windows SDK (or that, like SignerSignEx3, are entirely undocumented) are missing from the crate.

We need to call SignerSignEx3 without something to link against. We have two options:

- Use LoadLibrary(Ex) and GetProcAddress.

- Make our own .lib.

The latter seemed appealing because then the Rust code can continue to look clean.

    #[link(name = "mssign32")]
    extern "system" { // "system" matches WINAPI (stdcall) on 32-bit x86
        fn SignerSignEx3(...);
    }

Making a .lib that contains only exports is not too difficult. We can define our own .def file like so:

    LIBRARY mssign32
    EXPORTS
        SignerSignEx3

and use lib.exe to convert it to a linkable lib file:

    lib.exe /MACHINE:X64 /DEF:mssign32.def /OUT:mssign32.lib

If we put this file somewhere the Rust linker can find it, our code will compile and link successfully.
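Below is a minimal sketch that renders the .def text and the lib.exe arguments from a list of export names. It is for anyone who would rather generate the .def too, or just wants the arguments in one place. The helper names are hypothetical. The output reproduces the .def file and lib.exe command shown above.

```rust
/// Hypothetical helper: a .def file that only declares exports.
fn def_file(library: &str, exports: &[&str]) -> String {
    let mut out = format!("LIBRARY {}\nEXPORTS\n", library);
    for name in exports {
        out.push_str("    ");
        out.push_str(name);
        out.push('\n');
    }
    out
}

/// Hypothetical helper: lib.exe arguments that turn the .def into an import library.
fn lib_exe_args(machine: &str, def_path: &str, out_path: &str) -> Vec<String> {
    vec![
        format!("/MACHINE:{}", machine),
        format!("/DEF:{}", def_path),
        format!("/OUT:{}", out_path),
    ]
}
```

In a build script, the returned arguments can be passed to the lib.exe command with std::process::Command’s .args(...).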

I wasn’t thrilled about the idea of checking an opaque binary into source control for building, so I looked for a way to create it during the Rust build process.

Fortunately, Cargo makes that easy with build scripts. A build script is itself a Rust file named build.rs, in the same directory as your Cargo.toml file. Its usage is simple:

    fn main() {
        // Build script
    }

Crucially, if you write to stdout using println!, Cargo will recognize certain output as commands that modify the build process. For example:

    println!("cargo:rustc-link-search={}", "C:\\foo\\bar");

will add a path for the linker to search. We can begin to devise a plan to make this part of the build: our build script can call out to lib.exe to generate a .lib to link against, put it somewhere, and add that directory to the linker’s search path.

The next trick in our build script will be to find where lib.exe is. Fortunately, the Rust toolchain already solves this. It relies on link.exe from Visual Studio anyway, so it knows how to find SDK tooling (which moves all over the place between Visual Studio versions). The cc crate makes this easy for us.

    let target = env::var("TARGET").unwrap();
    let lib_tool = cc::windows_registry::find_tool(&target, "lib.exe")
        .expect("Could not find \"lib.exe\". Please ensure a supported version of Visual Studio is installed.");

The TARGET environment variable is set by Cargo and contains the target triple the build is for, since Rust can cross-compile. Conveniently, we can use this to support cross-compiled builds of azure_sign_tool_rs, so that we can make 32-bit builds on x64 Windows and x64 builds on 32-bit Windows. It also tells us which /MACHINE argument to pass to lib.exe.

I wrapped that up into a helper in case I need to add additional libraries.

    use std::env;
    use std::fmt;

    enum Platform {
        X64,
        X86,
        ARM64,
        ARM,
    }

    impl fmt::Display for Platform {
        fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {
            match *self {
                Platform::X64 => write!(f, "X64"),
                Platform::X86 => write!(f, "X86"),
                Platform::ARM => write!(f, "ARM"),
                Platform::ARM64 => write!(f, "ARM64"),
            }
        }
    }

    struct LibBuilder {
        pub platform: Platform,
        pub lib_tool: cc::Tool,
        pub out_dir: String,
    }

    impl LibBuilder {
        fn new() -> LibBuilder {
            let target = env::var("TARGET").unwrap();
            let out_dir = env::var("OUT_DIR").unwrap();
            // Target triples are lowercase, e.g. "x86_64-pc-windows-msvc",
            // "aarch64-pc-windows-msvc" or "thumbv7a-pc-windows-msvc".
            let platform = if target.starts_with("x86_64") {
                Platform::X64
            } else if target.starts_with("aarch64") {
                Platform::ARM64
            } else if target.starts_with("thumbv7a") || target.starts_with("arm") {
                Platform::ARM
            } else {
                Platform::X86
            };
            let lib_tool = cc::windows_registry::find_tool(&target, "lib.exe")
                .expect("Could not find \"lib.exe\". Please ensure a supported version of Visual Studio is installed.");
            LibBuilder { platform, lib_tool, out_dir }
        }

        fn build_lib(&self, name: &str) {
            let mut lib_cmd = self.lib_tool.to_command();
            lib_cmd
                .arg(format!("/MACHINE:{}", self.platform))
                .arg(format!("/DEF:build\\{}.def", name))
                .arg(format!("/OUT:{}\\{}.lib", self.out_dir, name));
            // output() only fails if lib.exe can't be started, so check the exit status too.
            let output = lib_cmd.output().expect("Failed to run lib.exe.");
            assert!(output.status.success(), "lib.exe failed: {}", String::from_utf8_lossy(&output.stdout));
        }
    }

Then our build script’s main can contain this:

    fn main() {
        let builder = LibBuilder::new();
        builder.build_lib("mssign32");
        println!("cargo:rustc-link-search={}", builder.out_dir);
    }

After this, I was able to link against mssign32.

Note that since this entire project is Windows-specific and has zero chance of running anywhere else, I did not bother to decorate anything with #[cfg(target_os = "windows")]. If you are attempting to make a cross-platform project, you’ll want to account for all of this in the Windows-specific parts.

With this, I now only need to check in a .def text file and Cargo will take care of the rest.
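The main() above only emits rustc-link-search. Below is a sketch of the directives a build script like this might print, with one addition that is my suggestion rather than part of the original. That addition is rerun-if-changed, so Cargo reruns the build script only when the checked-in .def file changes. The helper name is hypothetical; print each returned line with println!.

```rust
/// Hypothetical helper: build-script directives for linking a generated import library.
fn link_directives(out_dir: &str, def_path: &str) -> Vec<String> {
    vec![
        // Where the linker should look for the generated .lib.
        format!("cargo:rustc-link-search={}", out_dir),
        // Rerun the build script only when the .def file changes.
        format!("cargo:rerun-if-changed={}", def_path),
    ]
}
```

Usage in build.rs: for line in link_directives(&builder.out_dir, "build\\mssign32.def") { println!("{}", line); }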

-

Caddy

This is my first post with my blog running Caddy. In short, it’s a web server with a focus on making HTTPS simple. It accomplishes this by supporting ACME out of the box. ACME is the protocol that Let’s Encrypt uses. Technically, Caddy supports any Certificate Authority that supports ACME. Practically, few besides Let’s Encrypt do, though I am aware of other CAs making an effort to support issuance with ACME.

Though I’ve seen lots of praise for Caddy and its HTTPS ALL THE THINGS mantra for a while now, I never really dug into it until recently. What actually grabbed me were several of its other features.

Configuration is simple. That isn’t always a good thing. Simple usually means advanced configuration or features are lost in the trade-off. Fortunately, for me at least, this does not seem to be the case with Caddy, though it may be for others. When I evaluated Caddy, nginx was taking care of a number of things for me besides serving static content:

- Rewrite to WebP if the user agent accepts WebP.

- Serve pre-compressed gzip files if the user agent accepts it.

- Serve pre-compressed brotli files if the user agent accepts it.

- Take care of some simple redirects.

- Flexible TLS configuration around cipher suites, protocols, and key exchanges.

Caddy does all of those, and does them better. Points two and three Caddy just does: if a pre-compressed version of a file is on disk and the user agent accepts that encoding, it serves the gzip or brotli file.
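Caddy needs no configuration for this. For illustration only, here is a sketch of that kind of decision, not Caddy’s actual code. It serves path.br or path.gz when the client accepts the encoding and the file exists, and it prefers brotli when both would work (an assumption). The filesystem check is passed in as a closure so the logic can be tested, and q-values are ignored for brevity.

```rust
/// Returns the file to serve and the Content-Encoding to send with it, if any.
fn pick_precompressed<F: Fn(&str) -> bool>(
    path: &str,
    accept_encoding: &str,
    exists: F,
) -> (String, Option<&'static str>) {
    // Split "gzip, deflate, br;q=1.0" into bare coding names (q-values ignored, even q=0).
    let accepts = |coding: &str| {
        accept_encoding
            .split(',')
            .map(|part| part.split(';').next().unwrap_or("").trim())
            .any(|c| c.eq_ignore_ascii_case(coding))
    };
    // Try brotli first, then gzip.
    for (coding, ext) in [("br", "br"), ("gzip", "gz")].iter() {
        let candidate = format!("{}.{}", path, ext);
        if accepts(*coding) && exists(candidate.as_str()) {
            return (candidate, Some(*coding));
        }
    }
    (path.to_string(), None)
}
```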

Rewriting to WebP was easy:

    header /images {
        Vary Accept
    }
    rewrite /images {
        ext .png .jpeg .jpg
        if {>Accept} has image/webp
        to {path}.webp {path}
    }

The configuration does two things. First, it adds the Vary: Accept header to all responses under /images. This is important if a proxy or CDN is caching assets. The second part says: if the Accept header contains “image/webp”, rewrite the request to “{path}.webp”, so it will look for “foo.png.webp” if a browser requests “foo.png”. The second {path} means use the original if there is no WebP version of the file. Nginx, on the other hand, was a bit more complicated.

HTTPS / TLS configuration is simple and well documented. As the documentation points out, most people don’t need to do anything other than enable it. It has sensible defaults, and will use Let’s Encrypt to get a certificate.
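The WebP rewrite rule can be read as a tiny function. For .png, .jpeg or .jpg files under /images, it tries {path}.webp first when Accept mentions image/webp; otherwise it tries only the original path. This sketch mirrors the rule’s logic rather than Caddy’s implementation. Responses still need the Vary: Accept header so caches keep the two variants apart.

```rust
/// Candidate files to try, in order, mirroring `to {path}.webp {path}`:
/// the first candidate that exists on disk wins.
fn webp_candidates(path: &str, accept: &str) -> Vec<String> {
    let is_image = path.starts_with("/images/")
        && [".png", ".jpeg", ".jpg"].iter().any(|ext| path.ends_with(*ext));
    if is_image && accept.contains("image/webp") {
        vec![format!("{}.webp", path), path.to_string()]
    } else {
        vec![path.to_string()]
    }
}
```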

I’m optimistic about Caddy. I think it’s a very nice web server / reverse proxy. I spent about an hour moving my 400 lines of nginx configuration to 51 lines of Caddy configuration.

I’d recommend giving it a shot.

-

Azure SignTool

A while ago, Claire Novotny and I started exploring the feasibility of doing Authenticode signing with Azure Key Vault. Azure Key Vault lets you do some pretty interesting things, including treating it as a pseudo network-attached HSM.

A problem with Azure Key Vault, though, is that it’s an HTTP endpoint. It hasn’t yet been integrated into existing standards like CNG or PKCS#11. That makes it difficult to use with tools that expect a CSP or CNG provider, such as those for Authenticode signing.

Our first attempt at getting this working was to see if we could use the existing signtool. A while ago, I wrote about using some new options in signtool that let you sign the digest with whatever you want in my post Custom Authenticode Signing.

This made it possible, if a little unwieldy, to sign things with Authenticode and use Azure Key Vault as the signing source. As I wrote, the main problem was that you needed to run signtool twice and also develop your own application to sign the digest with Azure Key Vault. The steps went something like this:

- Run signtool with the /dg flag to produce a base64-encoded digest to sign.

- Produce a signature for that digest with Azure Key Vault, using a custom tool.

- Run signtool again with /di to ingest the signature.

This was, in a word, “slow”. The dream was to produce a signing service that could sign files in bulk. While a millisecond or two may not be the metric we care about, this was costing many seconds. It also left us feeling like the solution was held together by shoestrings and bubblegum.

/dlib

However, signtool mysteriously mentions a flag called /dlib. It says it combines /dg and /di into a single operation. The documentation, in its entirety, is this:

Specifies the DLL implementing the AuthenticodeDigestSign function to sign the digest with. This option is equivalent to using SignTool separately with the /dg, /ds, and /di switches, except this option invokes all three as one atomic operation.

This lacked a lot of detail, but it seemed to be exactly what we wanted. We can surmise that the value of this flag is a path to a library that exports a function called AuthenticodeDigestSign. That is easy enough to do. However, the documentation doesn’t mention what is passed to this function, or what we should return from it.

This is not impossible to figure out if we persist with windbg. To make a long story short, the function looks something like this:

    HRESULT WINAPI AuthenticodeDigestSign(
        CERT_CONTEXT* certContext,
        void* unused,
        ALG_ID algId,
        BYTE* pDigestToSign,
        DWORD cDigestToSign,
        CRYPTOAPI_BLOB* signature
    );

With this, it was indeed possible to make a library whose AuthenticodeDigestSign function signtool would call to sign the digest. Claire put together a C# library that did exactly that on GitHub under KeyVaultSignToolWrapper. I even made some decent progress on a Rust implementation.

This was a big improvement. Instead of multiple invocations of signtool, we could do it all at once. This still presented some problems though. The first was that there was no way to pass any configuration to the library through signtool. The best we could come up with was to wrap the invocation of signtool and set environment variables on the signtool process. The library then read its configuration, such as which vault to authenticate to and how, from those environment variables. A final caveat was that this still depended on signtool. Signtool is part of the Windows SDK, and technically we aren’t allowed to distribute it in pieces. If we wanted to use signtool, we would need to install it as part of the Windows SDK.
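Here is a sketch of the Rust side of the /dlib approach: an export with the reverse-engineered signature above. It rests on several assumptions. CERT_CONTEXT is treated as an opaque pointer. The signing step is a placeholder that only copies the digest; a real tool would call Azure Key Vault there. The signature buffer is assumed to follow the same rule as the SignerSignEx3 callback (heap allocated, freed once signing completes). The export attribute is left as a comment so the snippet compiles on any edition.

```rust
use std::os::raw::c_void;
use std::ptr;
use std::slice;

/// Mirrors CRYPTOAPI_BLOB: a byte count plus a pointer.
#[repr(C)]
pub struct CryptoApiBlob {
    pub cb_data: u32,
    pub pb_data: *mut u8,
}

pub type AlgId = u32;
pub type HResult = i32;
pub const S_OK: HResult = 0;
pub const E_INVALIDARG: HResult = 0x8007_0057u32 as HResult;

/// Placeholder for the real signing call (e.g. Azure Key Vault). NOT a signature.
fn sign_digest(_alg_id: AlgId, digest: &[u8]) -> Vec<u8> {
    digest.to_vec()
}

/// Reverse-engineered shape from the post. In a real cdylib this needs
/// #[no_mangle] (#[unsafe(no_mangle)] on edition 2024) so signtool finds it by name.
#[allow(non_snake_case)]
pub extern "system" fn AuthenticodeDigestSign(
    _cert_context: *const c_void,
    _unused: *mut c_void,
    alg_id: AlgId,
    p_digest_to_sign: *const u8,
    c_digest_to_sign: u32,
    signature: *mut CryptoApiBlob,
) -> HResult {
    if p_digest_to_sign.is_null() || signature.is_null() {
        return E_INVALIDARG;
    }
    let digest = unsafe { slice::from_raw_parts(p_digest_to_sign, c_digest_to_sign as usize) };
    // Hand the signature back in a heap allocation owned by this library.
    let sig = sign_digest(alg_id, digest).into_boxed_slice();
    let len = sig.len() as u32;
    let raw = Box::into_raw(sig) as *mut u8;
    unsafe {
        (*signature).cb_data = len;
        (*signature).pb_data = raw;
    }
    S_OK
}

/// Frees a blob filled by AuthenticodeDigestSign. Only valid for such blobs.
pub unsafe fn free_signature(blob: &mut CryptoApiBlob) {
    if !blob.pb_data.is_null() {
        let slice_ptr = ptr::slice_from_raw_parts_mut(blob.pb_data, blob.cb_data as usize);
        unsafe { drop(Box::from_raw(slice_ptr)) };
        blob.pb_data = ptr::null_mut();
        blob.cb_data = 0;
    }
}
```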

SignerSignEx3

Later, I noticed that Windows 10 includes a new signing API, SignerSignEx3. I happened upon it while using windbg in AuthenticodeDigestSign, when I saw that its caller was SignerSignEx3, not signtool. I checked the exports of mssign32 and did see it as a new export starting in Windows 10. The natural conclusion was that Windows 10 shipped a new API capable of using callbacks for signing the digest, and signtool wasn’t doing anything special.

As you may have guessed, SignerSignEx3 is not documented. It doesn’t exist in Microsoft Docs or in the Windows SDK headers. Fortunately, SignerSignEx2 is documented, so we weren’t starting from scratch. If we figured out SignerSignEx3, we could skip signtool completely and develop our own tool. SignerSignEx3 looks very similar to SignerSignEx2:

    // Not documented
    typedef HRESULT (WINAPI *SignCallback)(
        CERT_CONTEXT* certContext,
        PVOID opaque,
        ALG_ID algId,
        BYTE* pDigestToSign,
        DWORD cDigestToSign,
        CRYPT_DATA_BLOB* signature
    );

    // Not documented
    typedef struct _SIGN_CALLBACK_INFO {
        DWORD cbSize;
        SignCallback callback;
        PVOID opaque;
    } SIGN_CALLBACK_INFO;

    HRESULT WINAPI SignerSignEx3(
        DWORD dwFlags,
        SIGNER_SUBJECT_INFO *pSubjectInfo,
        SIGNER_CERT *pSignerCert,
        SIGNER_SIGNATURE_INFO *pSignatureInfo,
        SIGNER_PROVIDER_INFO *pProviderInfo,
        DWORD dwTimestampFlags,
        PCSTR pszTimestampAlgorithmOid,
        PCWSTR pwszHttpTimeStamp,
        PCRYPT_ATTRIBUTES psRequest,
        PVOID pSipData,
        SIGNER_CONTEXT **ppSignerContext,
        PCERT_STRONG_SIGN_PARA pCryptoPolicy,
        SIGN_CALLBACK_INFO *signCallbackInfo,
        PVOID pReserved
    );

Reminder: these APIs are undocumented. I made a best effort at reverse engineering them, and to my knowledge they work. I do not offer any guarantees though.

There’s a little more to it than this. First, for the callback parameter to be used at all, a new flag needs to be passed in. Its value is 0x400. If it is not specified, the signCallbackInfo parameter is ignored.

The usage is about what you would expect. A simple invocation might work like this:

    HRESULT WINAPI myCallback(
        CERT_CONTEXT* certContext,
        void* opaque,
        ALG_ID algId,
        BYTE* pDigestToSign,
        DWORD cDigestToSign,
        CRYPT_DATA_BLOB* signature)
    {
        // Set signature->cbData and signature->pbData (heap allocated) here.
        return S_OK;
    }

    int main()
    {
        SIGN_CALLBACK_INFO callbackInfo = { 0 };
        callbackInfo.cbSize = sizeof(SIGN_CALLBACK_INFO);
        callbackInfo.callback = myCallback;
        // 0x400 enables the signing callback; the pointer to callbackInfo is passed.
        HRESULT blah = SignerSignEx3(0x400, /*omitted*/ &callbackInfo, NULL);
        return blah;
    }

signatureparameter must be filled in with the signature. It must be heap allocated, but it can be freed after the call toSignerSignEx3completes.APPX

We’re not quite done yet. The solution above works with EXEs, DLLs, etc., but not with APPX packages, because signing an APPX requires some additional work. Specifically, the APPX Subject Interface Package requires some additional data to be supplied in the pSipData parameter.

Once again we are fortunate that there is some documentation on how this works with SignerSignEx2. However, those details are incorrect for SignerSignEx3, and the struct shape is not documented for SignerSignEx3.

To the best of my understanding, the SIGNER_SIGN_EX3_PARAMS structure should look like this:

    typedef struct _SIGNER_SIGN_EX3_PARAMS {
        DWORD dwFlags;
        SIGNER_SUBJECT_INFO *pSubjectInfo;
        SIGNER_CERT *pSigningCert;
        SIGNER_SIGNATURE_INFO *pSignatureInfo;
        SIGNER_PROVIDER_INFO *pProviderInfo;
        DWORD dwTimestampFlags;
        PCSTR pszTimestampAlgorithmOid;
        PCWSTR pwszTimestampURL;
        CRYPT_ATTRIBUTES *psRequest;
        SIGN_CALLBACK_INFO *signCallbackInfo;
        SIGNER_CONTEXT **ppSignerContext;
        CERT_STRONG_SIGN_PARA *pCryptoPolicy;
        PVOID pReserved;
    } SIGNER_SIGN_EX3_PARAMS;

If you’re curious about the methodology I used to figure this out, I documented the process in the GitHub issue for APPX support. I rarely take the time to write down how I learned something, but for once I managed to think of my future self referring to it. Perhaps that is worthy of another post on another day.

Quirks

SignerSignEx3 with a signing callback seems to have one quirk: it cannot be combined with the SIG_APPEND flag, so it cannot be used to append signatures. This seems to be a limitation of SignerSignEx3, as signtool has the same problem when using /dlib with the /as option.

Conclusion

It’s a niche API, I’ll give you that. However, combined with Subject Interface Packages, it makes Authenticode extremely flexible: not only in what it can sign, but now also in how it signs.

AzureSignTool’s source is on GitHub, MIT licensed, and has C# bindings.
